Abstract

Vehicle detection is important for advanced driver assistance systems (ADAS). Both LiDAR and cameras are commonly used. LiDAR provides excellent range information but with limits to object identification; the camera, on the other hand, allows for better recognition but with limits to high-resolution range information. This paper presents a sensor fusion based vehicle detection approach that fuses information from both LiDAR and cameras. The proposed approach is based on two components: a hypothesis generation phase to generate positions that potentially represent vehicles, and a hypothesis verification phase to classify the corresponding objects. Hypothesis generation is achieved using the stereo camera, while verification is achieved using the LiDAR. The main contribution is that the complementary advantages of the two sensors are utilized, with the goal of vehicle detection. The proposed approach leads to improved detection performance while maintaining tolerable false alarm rates compared to vision-based classifiers. Experimental results suggest a performance that is broadly comparable to the current state of the art, albeit with a reduced false alarm rate.

Introduction

Autonomous robots that assist humans in day-to-day living tasks are becoming increasingly popular. Autonomous mobile robots operate by sensing and perceiving their surrounding environment to make accurate driving decisions. A combination of several different sensors, such as LiDAR, radar, ultrasound sensors and cameras, is used to sense the surrounding environment of autonomous vehicles. These heterogeneous sensors simultaneously capture various physical attributes of the environment[1]. Such multimodality and redundancy of sensing need to be positively utilized for reliable and consistent perception of the environment through sensor data fusion. However, these multimodal sensor data streams differ from each other in many ways, such as temporal and spatial resolution, data format, and geometric alignment. For the subsequent perception algorithms to utilize the diversity offered by multimodal sensing, the data streams need to be spatially, geometrically and temporally aligned with each other. In this paper, we address the problem of fusing the outputs of a Light Detection and Ranging (LiDAR) scanner and a wide-angle monocular image sensor for free space detection. The outputs of the LiDAR scanner and the image sensor are of different spatial resolutions and need to be aligned with each other. A geometrical model is used to spatially align the two sensor outputs, followed by a Gaussian Process (GP) regression-based resolution matching algorithm to interpolate the missing data with quantifiable uncertainty. The results demonstrate that the proposed sensor data fusion framework significantly aids the subsequent perception steps, as illustrated by the performance improvement of an uncertainty-aware free space detection algorithm.

Vehicle detection is important for advanced driver assistance systems (ADAS). Both LiDAR and cameras are commonly used. LiDAR provides excellent range information but with limits to object identification; the camera, on the other hand, allows for better recognition but with limits to high-resolution range information. This paper presents a sensor fusion based vehicle detection approach that fuses information from both LiDAR and cameras[2]. The proposed approach is based on two components: a hypothesis generation phase to generate positions that potentially represent vehicles, and a hypothesis verification phase to classify the corresponding objects.

Literature review

Hypothesis generation is achieved using the stereo camera, while verification is achieved using the LiDAR. The main contribution is that the complementary advantages of the two sensors are utilized, with the goal of vehicle detection. The proposed approach leads to improved detection performance while maintaining tolerable false alarm rates compared to vision-based classifiers. Experimental results suggest a performance that is broadly comparable to the current state of the art, albeit with a reduced false alarm rate.

Camera

Cameras are a widely understood, mature technology. They are also reliable and relatively cheap to produce. Automotive applications that mostly rely on cameras today include advanced driver assistance systems (ADAS), surround view systems (SVS) and driver monitoring systems (DMS). Coupled with infrared lighting, they can perform to some extent at night. However, they have some significant limitations too. In fact, cameras often face many of the same limitations as the human eye[3]. They need a clear lens to see properly, which limits where vehicle manufacturers can position them, and they don’t always provide a crisp or reliable image in bad weather, particularly in heavy rain or snow. At night, they’re often only as good as the vehicle’s headlights, a significant obstacle to achieving accuracy. Perhaps the one fundamental limitation of camera technology in providing a fully autonomous driving experience is that, with no human brain to make a determination about what is being seen, cameras rely on complex predictions from neural networks. This requires significant amounts of training and processing power, two factors that are inherently limited in an in-vehicle computer system[4].

Lidar

Lidar stands for light detection and ranging, with systems typically using invisible laser light to measure the distance to objects in a similar way to radar. Originally developed as a surveying technology, lidar measures a large number of points to build up an incredibly detailed 3D view of the environment around the sensor. In a vehicle, lidar can provide the most detailed understanding possible of the road, road users and potential hazards surrounding the vehicle. Impressively, lidar can spot objects up to 100 meters or so away, and can measure distances at an accuracy of up to 2cm. Lidar is also unaffected by adverse weather conditions, such as wind, rain and snow, and in fact could even be used to map inaccessible areas in heavy snow conditions[5]. Lidar technology isn’t without its own set of specific limitations, however. For a start, it takes a huge amount of processing power to interpret up to a million measurements every second, and then translate them into actionable data. Lidar sensors are also complex, with many relying on moving parts, which can make them more vulnerable to damage. Lidar’s forensic view of the world is overkill for today’s ADAS needs, but it remains an important technology for future driverless vehicles, with General Motors and Waymo being notable proponents. At present, it’s prohibitively expensive and largely problematic in the automotive industry, but when the technology is ready for the mainstream, its performance may prove to be indispensable[6].

Dataset used (KITTI dataset)

The experimental results are based on custom data captures using the platform described in the previous subsection. Six datapoints covering a range of situations are captured to represent different conditions. Each datapoint consists of a data capture by the platform as it moves in a straight line until it can’t proceed because of an obstacle in its path[7].

Proposed method

Proposed Algorithm

To address the challenges presented above, this section proposes a framework for data fusion and describes the proposed algorithm for fusing LiDAR data with a wide-angle imaging sensor.

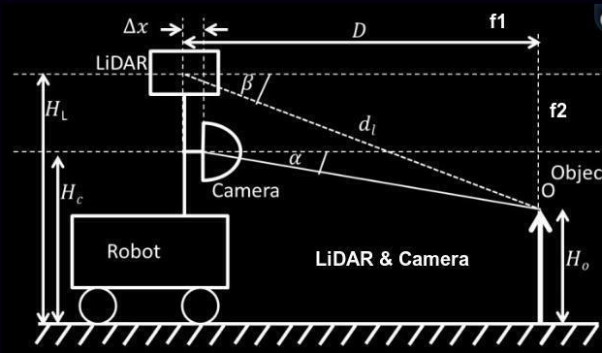

Geometric Alignment of LiDAR and Camera Data

The first step of the data fusion algorithm is to geometrically align the data points of the LiDAR output and the 360° camera. The purpose of the geometric alignment is to find the corresponding pixel in the camera output for each data point output by the LiDAR sensor.
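As an illustration of this point-to-pixel mapping, the following is a minimal sketch and not the geometric model used in this paper. It assumes that the 360° camera delivers an equirectangular panorama and that a rotation matrix and translation vector relating the two sensors are known from calibration. The function name `lidar_point_to_pixel`, the axis convention and the example image size are all hypothetical.

```python
import math


def lidar_point_to_pixel(point, rotation, translation, width, height):
    """Map one LiDAR point (x, y, z) to (column, row, range) in a panorama.

    rotation (3x3, row-major) and translation (3-vector) are assumed
    extrinsic calibration values that take LiDAR coordinates into the
    camera frame (x forward, y left, z up). The image is assumed to be
    equirectangular: columns span azimuth, rows span elevation.
    """
    # Rigid transform into the camera frame: p_cam = R * p_lidar + t
    x, y, z = (
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    rng = math.sqrt(x * x + y * y + z * z)
    if rng == 0.0:
        raise ValueError("point coincides with the camera centre")
    azimuth = math.atan2(y, x)      # in [-pi, pi], 0 means straight ahead
    elevation = math.asin(z / rng)  # in [-pi/2, pi/2], 0 means the horizon
    # Straight ahead lands in the image centre; left of the vehicle moves
    # towards column 0, and upwards moves towards row 0.
    col = int((0.5 - azimuth / (2.0 * math.pi)) * width) % width
    row = int((0.5 - elevation / math.pi) * height)
    row = min(max(row, 0), height - 1)
    return col, row, rng


# Usage: identical sensor frames, a point 10 m straight ahead.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(lidar_point_to_pixel((10.0, 0.0, 0.0), identity, [0.0, 0.0, 0.0], 1000, 500))
# (500, 250, 10.0)
```

The returned range is the distance value that would be attached to the matched pixel. A real lens would additionally need its own distortion model, which this sketch leaves out.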

Although it imposes only modest requirements for calibration, the above geometric alignment process cannot be fully relied upon as a robust mechanism, since errors in calibration measurements, imperfections in sensor assembly, and per-unit variations arising from the manufacturing processes may introduce factors that deviate from the ideal sensor geometry. For example, the curvature of the 360° camera might not be uniform across its surface. Therefore, to be robust to such discrepancies, the geometrically aligned data should ideally undergo another level of adjustment. This is accomplished in the next stage of the framework by utilizing the spatial correlations that exist in image data[8].

Another problem that arises when fusing data from different sources is the difference in data resolution. For the case addressed in this paper, the resolution of the LiDAR output is considerably lower than that of the images from the camera. Therefore, the next stage of the data fusion algorithm is designed to match the resolutions of the LiDAR data and the imaging data through an adaptive scaling operation[9].

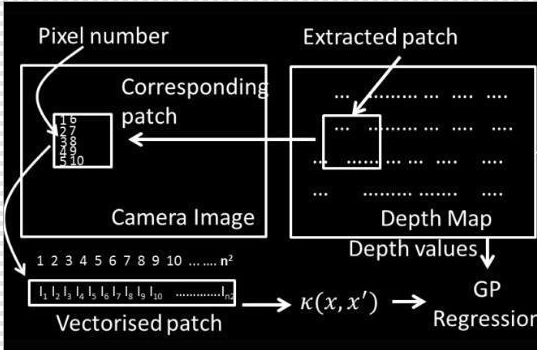

Resolution Matching Based on Gaussian Process Regression

In this section we describe the proposed mechanism to match the resolutions of the LiDAR data and the imaging data. Through geometric alignment, we matched the LiDAR data points with the corresponding pixels in the image. However, the image resolution is far greater than that of the LiDAR output. The objective of this step is to find an appropriate distance value for the image pixels for which there is no corresponding distance value. Furthermore, another requirement of this stage is to compensate for discrepancies or errors in the geometric alignment step[10].

We formulate this problem as a regression-based missing value prediction, where the relationship between the measured data points (available distance values) is used to interpolate the missing values. For this purpose we use Gaussian Process Regression (GPR), which is a non-linear regression technique. GPR allows the covariance of the data to be defined in any suitable way.
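To make the interpolation step concrete, the sketch below shows textbook Gaussian Process Regression applied to a handful of pixels with known distances. It is a minimal illustration under stated assumptions, not the configuration used in this paper: the squared-exponential covariance, the constant prior mean, the hyperparameter values and all function names (`rbf`, `gp_predict`, and the helpers) are hypothetical choices.

```python
import math


def rbf(a, b, length_scale, signal_var):
    """Squared-exponential covariance between two pixel coordinates."""
    sq = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return signal_var * math.exp(-0.5 * sq / length_scale ** 2)


def cholesky(m):
    """Lower-triangular factor of a symmetric positive-definite matrix."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def forward_sub(low, b):
    """Solve low * y = b for lower-triangular low."""
    y = []
    for i, row in enumerate(low):
        y.append((b[i] - sum(row[k] * y[k] for k in range(i))) / row[i])
    return y


def backward_sub(low, y):
    """Solve transpose(low) * x = y for lower-triangular low."""
    n = len(low)
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(low[k][i] * x[k] for k in range(i + 1, n))
        x[i] = (y[i] - s) / low[i][i]
    return x


def gp_predict(train_xy, train_dist, query_xy,
               length_scale=2.0, signal_var=1.0, noise_var=1e-2):
    """Posterior mean and variance of the distance at one query pixel."""
    mean0 = sum(train_dist) / len(train_dist)  # constant prior mean
    n = len(train_xy)
    # Covariance of the measured pixels, with measurement noise on the diagonal.
    k = [[rbf(train_xy[i], train_xy[j], length_scale, signal_var)
          + (noise_var if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    low = cholesky(k)
    resid = [d - mean0 for d in train_dist]
    alpha = backward_sub(low, forward_sub(low, resid))  # K^-1 (y - mean0)
    k_star = [rbf(x, query_xy, length_scale, signal_var) for x in train_xy]
    mean = mean0 + sum(ks * a for ks, a in zip(k_star, alpha))
    v = forward_sub(low, k_star)
    var = signal_var - sum(vi * vi for vi in v)  # k** - k*^T K^-1 k*
    return mean, max(var, 0.0)


# Usage: two pixels with LiDAR distances, one pixel in between without.
pixels = [(0.0, 0.0), (4.0, 0.0)]
distances = [5.0, 7.0]
print(gp_predict(pixels, distances, (2.0, 0.0)))
```

The second returned value is the predictive variance. It grows as the query pixel moves away from measured points, which is one way the interpolated distances can carry a quantifiable uncertainty.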

Experiment (comparing the method with others)

GP Based Resolution Matching Framework

For the performance evaluations that follow, we use three image segments that are to be fused. Using the fused output, which is a distance map as shown in Figure, the free space points are identified. The logical mask that represents free space points is referred to as the “free space mask”. The free space mask is then compared with the ground truth mask. The ground truth masks for the three image segments are manually marked[6]. Similarly, to measure the performance of the different techniques discussed in the methodology sections, a free space detection (FSD) mask is obtained and compared against the ground truth. The number of pixels that do not match the ground truth is obtained by a simple ‘xor’ operation of the masks. The proportion of pixels that match the ground truth, referred to as “Accuracy”, is used as the primary measure of performance. Furthermore, the precision and true positive rate are also calculated as measures of performance. These metrics are summarized in Table 1. We compare the performance of the GP regression method with two other methods: tensor factorization for incomplete data and robust smoothing based on the discrete cosine transform. The results are compared based on the performance of the FSD algorithm, and are summarized in Table 1. As shown in Table 1, the proposed GP regression-based approach performs consistently well.
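The mask comparison described above can be sketched as follows. This is a minimal illustration that assumes both masks are equally sized nested lists of 0/1 or boolean values; the function name `mask_metrics` and the toy masks are hypothetical and are not taken from the experiments.

```python
def mask_metrics(predicted, ground_truth):
    """Accuracy, precision and true positive rate of a free space mask."""
    tp = fp = fn = mismatches = total = 0
    for pred_row, truth_row in zip(predicted, ground_truth):
        for p, t in zip(pred_row, truth_row):
            pred, truth = bool(p), bool(t)
            total += 1
            mismatches += pred ^ truth    # xor is true where the masks differ
            tp += pred and truth          # free space correctly detected
            fp += pred and not truth      # free space reported but not present
            fn += truth and not pred      # free space present but missed
    return {
        "accuracy": 1.0 - mismatches / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "true_positive_rate": tp / (tp + fn) if tp + fn else 0.0,
    }


# Usage with two toy 2x3 masks.
detected = [[1, 1, 0],
            [0, 1, 0]]
marked = [[1, 0, 0],
          [0, 1, 1]]
print(mask_metrics(detected, marked))
```

In this toy case two of the six pixels disagree, so the accuracy is 4/6, and both the precision and the true positive rate are 2/3.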

Algorithms comparison

Discussion and Limitation

Multimodal data provides the opportunity to utilize the diversity offered by heterogeneous physical sensors to overcome the limitations of individual sensors[7]. The above sections demonstrated that data fusion leads to more robust recognition algorithms. The challenges of data fusion go beyond extrinsic calibration. In the above sections we discussed how to overcome resolution mismatches in heterogeneous data sources. Furthermore, different data sources have different uncertainties associated with them. We demonstrated how the uncertainties associated with different data sources can be accounted for, in order to use fused data for recognition tasks.

Conclusion and future work

With each technology having its own advantages and disadvantages, driverless vehicles are unlikely to rely on just one system to see and navigate the world. Many companies believe in adapting the most appropriate solution to the customer’s specific needs. We also believe that sensor fusion, using a combination of multiple sensor technologies to eliminate the weaknesses of any one sensor type, will prove to be the best way forward.

References

1. Dimitrievski, M., Veelaert, P. and Philips, W., 2019. Behavioral Pedestrian Tracking Using a Camera and LiDAR Sensors on a Moving Vehicle. Sensors, 19(2), p.391.

2. Caltagirone, L., Bellone, M., Svensson, L. and Wahde, M., 2019. LIDAR–camera fusion for road detection using fully convolutional neural networks. Robotics and Autonomous Systems, 111, pp.125-131.

3. Optical Engineering, 2019. Ultra-low-light-level digital still camera for autonomous underwater vehicle. 58(01), p.1.

4. Journal of Shellfish Research, 2013. A Camera-Based Autonomous Underwater Vehicle Sampling Approach to Quantify Scallop Abundance. 32(3), p.725.

5. Garratt, M., Francis, S. and Anavatti, S., 2018. Real-time path planning module for autonomous vehicles in cluttered environment using a 3D camera. International Journal of Vehicle Autonomous Systems, 14(1), p.40.

6. Wang, H., Wang, B., Liu, B., Meng, X. and Yang, G., 2017. Pedestrian recognition and tracking using 3D LiDAR for autonomous vehicle. Robotics and Autonomous Systems, 88, pp.71-78.

7. Javanmardi, E., Gu, Y., Javanmardi, M. and Kamijo, S., 2019. Autonomous vehicle self-localization based on abstract map and multi-channel LiDAR in urban area. IATSS Research, 43(1), pp.1-13.

8. Rao, D., 2020. Autonomous Obstacle Avoidance Vehicle using LIDAR and an Embedded System. International Journal for Research in Applied Science and Engineering Technology, 8(6), pp.25-31.

9. Meng, X., Wang, H. and Liu, B., 2017. A Robust Vehicle Localization Approach Based on GNSS/IMU/DMI/LiDAR Sensor Fusion for Autonomous Vehicles. Sensors, 17(9), p.2140.

10. Physics Today, 2013. Lidar, camera, action!