Overall Goals / Research Hypothesis

In this project we analyse a movie ratings dataset that contains, for each movie, its name, release year, IMDb rating, Metascore and number of IMDb votes. The analysis is done in Python in the following steps: load the dataset, prepare it by removing duplicate records, train a model on the prepared data, use the trained model to make predictions, and plot the predictions to analyse the results. A research hypothesis is a specific, clear and testable statement about the possible outcome of a scientific study. It is more specific than a general research question and can be described as an educated guess about the relationship between two or more variables. Our original hypothesis involved classifying the given dataset; it met with some success, and the results are discussed below.
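The loading and de-duplication steps can be sketched with the Python standard library. With pandas the same steps would usually be `pd.read_csv(...)` followed by `DataFrame.drop_duplicates()`; the sketch below avoids that dependency. The column names (`movie`, `year`, `imdb`, `metascore`, `votes`) and the sample rows are hypothetical, chosen to match the fields listed above rather than taken from the actual dataset.

```python
import csv
import io

# Hypothetical sample in the shape described above: movie name, year,
# IMDb rating, Metascore and number of IMDb votes. Column names are assumptions.
SAMPLE_CSV = """movie,year,imdb,metascore,votes
Movie A,2001,7.8,71,120000
Movie B,2005,6.4,55,80000
Movie A,2001,7.8,71,120000
Movie C,2010,8.1,83,300000
"""


def load_movies(text):
    """Parse CSV text into a list of dicts, converting the numeric fields."""
    rows = []
    for rec in csv.DictReader(io.StringIO(text)):
        rows.append({
            "movie": rec["movie"],
            "year": int(rec["year"]),
            "imdb": float(rec["imdb"]),
            "metascore": int(rec["metascore"]),
            "votes": int(rec["votes"]),
        })
    return rows


def drop_duplicates(rows):
    """Keep the first occurrence of each identical record, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the whole record
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


movies = drop_duplicates(load_movies(SAMPLE_CSV))
print(len(movies), "unique records")
```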

Summary

The project takes a data science approach and is implemented in Python. R and Python are both common languages for this kind of work, and both make simple, basic analyses easy to implement and display. The Excel data is imported with a data library (pandas), which is used to preprocess and analyse the data, including time-series prediction, while SciPy and NumPy provide the equivalent scientific and numerical techniques. Python is well suited to data manipulation and repetitive, automated tasks, while R is good for temporal analysis and exploring datasets (Allen, Campbell & Hu, 2015). Many developers use Python for importing and analysing data as well as for back-end web development, artificial intelligence and scientific computing. It makes it easy to build tools and even games, and it is useful for beginners and experts alike.

Feature Selection / Engineering

Since we are using a text analysis model, we extract features from words. Several kinds of features are possible, such as TF-IDF, sentiment, count vectors, word embeddings and topic models. Once this step is complete, we have data from which features can be extracted. Feature selection is the process of automatically or manually selecting the features that contribute most to the prediction variable or output (Bai, Lu & Yang, 2018). Irrelevant features in the data can reduce the accuracy of a model and cause it to learn from the wrong signals. Filter methods for feature selection are normally used as a preprocessing step and are independent of any machine learning algorithm: features are selected based on their scores in various statistical tests of their correlation with the outcome variable. "Correlation" is a loose term here, since the appropriate test depends on the types of the feature and the outcome. Feature selection filters out irrelevant or redundant features from the dataset. The key difference between feature selection and feature extraction is that feature selection keeps a subset of the original features, whereas feature extraction creates new ones.
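A filter method can be illustrated with a minimal sketch that scores each numeric feature by its absolute Pearson correlation with the target and keeps the top k. Pearson correlation is only one possible statistical test, assumed here for simplicity; scikit-learn offers comparable tools such as `SelectKBest`. The feature names and values are hypothetical toy data.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no information about the target
    return cov / (sx * sy)


def select_k_best(features, target, k):
    """Filter method: rank features by |correlation| with the target, keep top k.

    No model is trained, so the selection is independent of any learning algorithm.
    """
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores


# Hypothetical toy data: predict the IMDb rating from three candidate features.
features = {
    "metascore": [71, 55, 83, 60, 90],
    "votes": [120, 80, 300, 95, 410],   # thousands of votes
    "constant": [1, 1, 1, 1, 1],        # irrelevant feature with no signal
}
imdb = [7.8, 6.4, 8.1, 6.9, 8.6]

kept, scores = select_k_best(features, imdb, k=2)
print(kept, {name: round(s, 3) for name, s in scores.items()})
```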

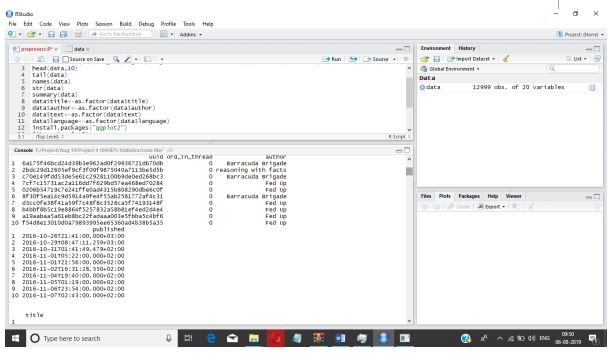

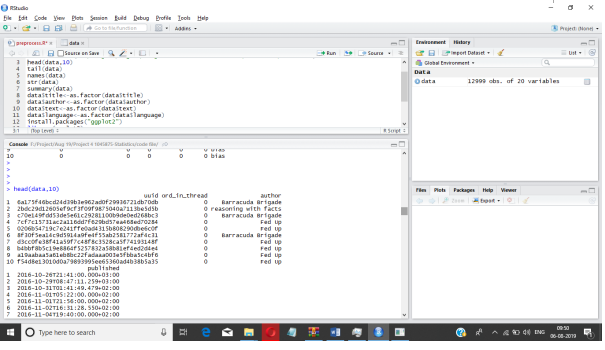

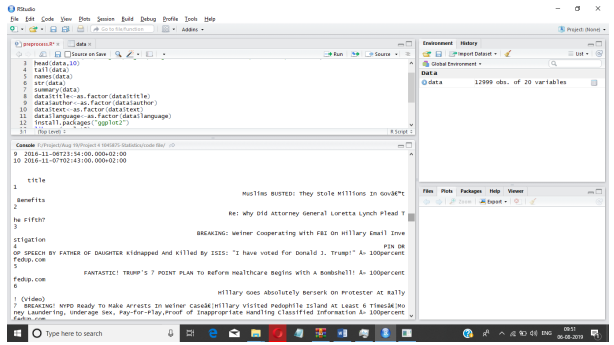

The figures above show the dataset being imported and defined in Python, including its first rows (head) and the record count.

The figures also include a plot of the Metascore values in the dataset.
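A histogram such as the Metascore plot groups values into bins and draws one bar per bin (for example with `matplotlib.pyplot.hist`). The sketch below computes those bin counts and prints them as a text bar chart so it runs without a plotting library. The 10-point bin width and the sample scores are assumptions, and Metascores are assumed to be integers on a 0-100 scale.

```python
from collections import Counter

WIDTH = 10  # assumed bin width in Metascore points


def metascore_bins(scores, width=WIDTH):
    """Count integer Metascores (0-100) per bin of the given width.

    Returns a sorted list of (bin_start, count); these are the bar heights a
    histogram of the Metascore column would show. A score of 100 is placed in
    the top bin (90-100) rather than in a bin of its own.
    """
    counts = Counter(min(s // width * width, 100 - width) for s in scores)
    return sorted(counts.items())


# Hypothetical Metascore values, not taken from the report's dataset.
scores = [71, 55, 83, 60, 90, 100, 64]
for start, count in metascore_bins(scores, WIDTH):
    end = 100 if start + WIDTH >= 100 else start + WIDTH - 1
    print(f"{start:>3}-{end:<3} {'#' * count}")
```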

Training Method(s)

We import the movie ratings dataset and train models on it using the methods below; the Python packages needed for training and plotting are installed first. The dataset contains the movie name, year, scores and vote counts. After the data is prepared and analysed, the Metascore and IMDb data are compared and plotted against each other (Fan, Liang, Chen & Yan, 2019). Machine learning involves training a computer on a given dataset and then using that training to predict the properties of new data. The training methods were kept the same for both of our hypotheses, classifying the tweet itself and classifying the record. Because both end up with a similar data structure, a TF-IDF vector, we can feed it directly into the train_test_split function to obtain the inputs for the classifiers. Part of the difficulty was obtaining a dataset that contained enough examples of both targets for the classifier to give a good result. Since the goal of the task was to perform NLP, the choice of classifiers was limited to ones that work well for text classification. Naive Bayes, a linear Support Vector Machine and Logistic Regression were all tried as classifiers for our model. Although each works well on its own, an ensemble was also tried, with weights on each member, to see which combination gave the highest success rate. The three classifiers used in the ensemble were Logistic Regression, Naive Bayes and a Random Forest Classifier (Hill & Pitt, 2016).
Another ensemble method used was a Random Forest Classifier on its own, with more estimators than the one in the averaging/weighting ensemble. The test set was kept at 30% of the data, with the remaining 70% used for training, and the random state was fixed.
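The weighted averaging ensemble described above can be sketched as soft voting: each classifier outputs a probability for the positive class, the probabilities are averaged with per-model weights, and the average is thresholded at 0.5. This is the same idea as scikit-learn's `VotingClassifier(voting="soft", weights=...)`, but the sketch below is library-free and the probability values are hypothetical, not outputs of the report's models.

```python
def weighted_soft_vote(prob_lists, weights, threshold=0.5):
    """Combine per-model class probabilities with a weighted average.

    prob_lists: one list per model, each holding P(class=1) for every sample.
    weights: one non-negative weight per model.
    Returns the predicted label (0 or 1) for every sample.
    """
    if len(prob_lists) != len(weights):
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    n_samples = len(prob_lists[0])
    labels = []
    for i in range(n_samples):
        avg = sum(w * probs[i] for w, probs in zip(weights, prob_lists)) / total
        labels.append(1 if avg >= threshold else 0)
    return labels


# Hypothetical P(class=1) outputs of the three ensemble members for four samples.
logreg = [0.9, 0.4, 0.30, 0.9]
naive_bayes = [0.8, 0.7, 0.20, 0.3]
random_forest = [0.7, 0.3, 0.45, 0.2]
members = [logreg, naive_bayes, random_forest]

print(weighted_soft_vote(members, [1, 1, 1]))  # equal weights
print(weighted_soft_vote(members, [3, 1, 1]))  # Logistic Regression weighted higher
```

With equal weights the fourth sample is labelled 0; giving Logistic Regression three times the weight flips it to 1, which is the kind of effect that trying different ensemble weights explores.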

The movie dataset is imported, and the training data taken from it is displayed.

Interesting findings

We use statistical methods to estimate how accurate our models are on unseen data. We also want a more concrete estimate of the accuracy of the best model, obtained by evaluating it on data it has genuinely never seen (Hurn, 2011). That is, we hold back some data that the algorithms do not get to see during training, and we use this data to get a second, independent idea of how accurate the best model really is.

The data is split into two parts: 80% is used for training and the remaining 20% is held back as a validation set. The machine learning algorithms are trained on the training part and then used to make predictions over the periods covered by the data. The algorithms evaluated are a good mix of simple linear methods (logistic regression, LR, and linear discriminant analysis, LDA) and nonlinear methods (k-nearest neighbours, KNN; classification and regression trees, CART; naive Bayes, NB; and support vector machines, SVM). We reset the random number seed before each run to ensure that every algorithm is evaluated on exactly the same data splits, which makes the results directly comparable.
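The hold-out split and the seed reset can be sketched with the standard library: shuffling a copy of the rows with a freshly seeded generator gives the same 80/20 split on every call, so every algorithm is evaluated on identical data. scikit-learn's `train_test_split(..., test_size=0.2, random_state=...)` does the equivalent job; the seed value 7 and the stand-in records are arbitrary assumptions.

```python
import random


def holdout_split(rows, validation_fraction=0.2, seed=7):
    """Shuffle a copy of rows with a fixed seed and split off a validation set.

    A new generator is created from the same seed on every call, so every
    algorithm evaluated afterwards sees exactly the same train/validation rows.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


records = list(range(100))  # stand-ins for 100 hypothetical movie records
train_a, val_a = holdout_split(records)
train_b, val_b = holdout_split(records)  # e.g. the split used for a second algorithm
print(len(train_a), len(val_a))  # 80 20
print(val_a == val_b)            # True
```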

Simple Features and Methods

The training data contains the target attribute that the algorithm learns to predict when building the model (“Training the kit generation”, 2011). Training means providing a learning algorithm with training data: the algorithm finds patterns in the training data that map the input attributes to the target, and the resulting model captures these patterns. The dataset is then plotted and analysed. For the simple feature process we used TF-IDF and other NLP methods. There are trade-offs in how the text itself is processed, for example using different stemming techniques or keeping certain numbers. TF-IDF preprocessing was run from the command line, with pandas in Python used to store the data in HDF format. Once preprocessing was complete, the results were stored and used for training under the original hypothesis, and the hypothesis was then tested by predicting values for the first file.
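The TF-IDF weighting mentioned above can be sketched in plain Python. This uses the textbook definition (term frequency times the log of the inverse document frequency) with whitespace tokenisation and no stemming. scikit-learn's `TfidfVectorizer` uses raw counts, a smoothed IDF and L2 normalisation by default, so its numbers would differ. The example documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Compute TF-IDF weights for a list of non-empty text documents.

    tf  = term count / number of terms in the document
    idf = log(N / number of documents containing the term)
    Returns one {term: weight} dict per document.
    """
    tokenised = [doc.lower().split() for doc in documents]  # no stemming
    n_docs = len(tokenised)
    # Document frequency: in how many documents each term appears at least once.
    doc_freq = Counter(term for tokens in tokenised for term in set(tokens))
    weights = []
    for tokens in tokenised:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


docs = ["great movie great cast", "boring movie", "great story"]
for w in tf_idf(docs):
    print({term: round(value, 3) for term, value in w.items()})
```

A word that appears in every document gets a weight of zero, and rarer words such as "cast" or "boring" get higher weights than the shared word "movie".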

Model Execution Time

Running even the minimal model took 24 hours, most of it spent preprocessing the dataset (Wegman, 2012). To get around this, the processed dataset was saved to a file in HDF format so that preprocessing only had to be done once. The execution time therefore breaks down into two stages. First, the entire dataset is processed and converted into a structure suitable for statistical analysis. Second, the classification methods are run: logistic regression and naive Bayes, the Random Forest classifier, the weighted-average ensemble and the linear support vector machine. The results of these classifiers were used to test both the original hypothesis and the added-value hypothesis.
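Preprocessing once and reusing the result can be sketched as a simple file cache. The report used the HDF format, which in pandas would be `DataFrame.to_hdf` and `pd.read_hdf` (both require the PyTables package); the sketch below swaps in the standard-library `pickle` module so it runs without extra dependencies, and `slow_preprocess` is a hypothetical stand-in for the real preprocessing.

```python
import os
import pickle
import tempfile


def load_or_preprocess(raw_rows, cache_path, preprocess):
    """Return preprocessed data, running the expensive step only once.

    If cache_path exists, the saved result is loaded (only load trusted pickle
    files); otherwise preprocess() runs and its result is written to cache_path.
    """
    if os.path.exists(cache_path):
        with open(cache_path, "rb") as fh:
            return pickle.load(fh)
    result = preprocess(raw_rows)
    with open(cache_path, "wb") as fh:
        pickle.dump(result, fh)
    return result


calls = []


def slow_preprocess(rows):
    """Hypothetical stand-in for the long-running preprocessing step."""
    calls.append(1)  # record each real run so the caching can be checked
    return [row.strip().lower() for row in rows]


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "processed.pkl")
    raw = ["  Movie A ", "MOVIE B"]
    first = load_or_preprocess(raw, path, slow_preprocess)
    second = load_or_preprocess(raw, path, slow_preprocess)  # served from the cache
    print(first == second, len(calls))  # True 1
```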

References

Allen, G., Campbell, F., & Hu, Y. (2015). Comments on “visualizing statistical models”: Visualizing modern statistical methods for Big Data. Statistical Analysis and Data Mining: The ASA Data Science Journal, 8(4), 226-228. doi: 10.1002/sam.11272

Bai, T., Lu, H., & Yang, J. (2018). Maintaining temporal validity of real-time data on non-continuously executing resources. MATEC Web of Conferences, 160, 07008. doi: 10.1051/matecconf/201816007008

Fan, Y., Liang, Q., Chen, Y., & Yan, X. (2019). Executing time and cost-aware task scheduling in hybrid cloud using a modified DE algorithm. International Journal of Computational Science and Engineering, 18(3), 217. doi: 10.1504/ijcse.2019.098532

Hill, H., & Pitt, J. (2016). Statistical Analysis of Numerical Preclinical Radiobiological Data. ScienceOpen Research. doi: 10.14293/s2199-1006.1.sor-stat.afhtwc.v1

Hurn, B. (2011). Simulation training methods to develop cultural awareness. Industrial and Commercial Training, 43(4), 199-205. doi: 10.1108/00197851111137816

Training the kit generation. (2011). Nature Methods, 8(12), 983-983. doi: 10.1038/nmeth.1804

Wegman, E. (2012). Special issue of statistical analysis and data mining. Statistical Analysis and Data Mining, 5(3), 177-177. doi: 10.1002/sam.11151