Summary:

1.1 Overview of the System:

The eStage application is used to manage an online backstage. Its design allows the system to identify the role of each stakeholder involved in backstage management. To use the system, a user must log in by selecting a specific role and must pass authentication before accessing its features. After a successful login, each user is shown a welcome page tailored to that user. The main options available to users are Home, Logoff, Discipline and Competitors, and users can view details by selecting the appropriate option, which helps them use the system effectively. Competitors can register themselves in the system. Stage managers can move competitors, withdraw them, or change the competitor sequence. Judgements and results can both be recorded by entering score and result data into the system, which manages this information and distributes the results automatically.
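The stage manager operations described above can be pictured as operations on an ordered list of competitors. The sketch below is a minimal Python illustration under that assumption only; the class `RunningOrder` and its methods `register`, `move` and `withdraw` are hypothetical names, not part of eStage.

```python
from dataclasses import dataclass, field


@dataclass
class RunningOrder:
    """Hypothetical model of the competitor sequence for one discipline."""
    competitors: list[str] = field(default_factory=list)

    def register(self, name: str) -> None:
        # A newly registered competitor joins the end of the sequence.
        if name in self.competitors:
            raise ValueError(f"{name} is already registered")
        self.competitors.append(name)

    def move(self, name: str, new_position: int) -> None:
        # Stage manager moves a competitor to a new 0-based position.
        self.competitors.remove(name)
        self.competitors.insert(new_position, name)

    def withdraw(self, name: str) -> None:
        # Stage manager withdraws a competitor from the sequence.
        self.competitors.remove(name)


order = RunningOrder()
for name in ["Ana", "Ben", "Cleo"]:
    order.register(name)
order.move("Cleo", 0)
order.withdraw("Ben")
print(order.competitors)  # ['Cleo', 'Ana']
```

Keeping the sequence as a plain list makes "change the competitor sequence" a remove-then-insert, which is easy to test in isolation.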

1.2 Test Purpose:

- Analysing business requirements and testing critical transactions.

- Confirming that the project requirements are satisfied and providing information on how well the developed software can be adapted.

- Preparing a testing schedule so that a workable test plan can be developed, which is essential for a quality product.

1.3 Test Objective:

The main objective of testing is to ensure that the project meets its requirements. This test document also guides the testing team in meeting those requirements. It is used to evaluate the quality of the final build of the system and to ensure that the number of errors in the system is kept to a minimum.

2. Project Scope:

This software testing project aims to deliver an online backstage management system that is free of errors. To this end, integration testing, unit testing, system testing and user acceptance testing will be carried out. The project is expected to take around one month to complete. The following functionality will be tested:

- Controlling the different nodes.

- Updating user profiles.

- Adding and deleting nodes.

- Validating the admin login.

- Adding and deleting users.

The overall performance of the system depends entirely on the hardware and platform on which it runs, so these aspects are outside the scope of the project. Computer clusters are also out of scope.

3. Testing Plan for the Project:

a. Test Plan Design:

Different approaches need to be evaluated before software testing begins. The aim of this evaluation is to select the best method for testing the online backstage management system. The limitations of the project have been identified by evaluating its scope and objectives. The main types of testing performed are described below.

- Integration Testing: Integration testing checks the functionality of the software once its modules are combined. Modules are integrated and tested together to confirm that they are compatible with one another. Several platforms have been used for evaluation to ensure that the software runs on different platforms and configurations.

- Unit Testing: Unit testing verifies the code and logic developed for the software. It needs to be performed at several stages to minimise bugs, so that the final release of the developed software contains no errors.

- User Acceptance Testing: User acceptance is an important criterion in software testing. This type of testing ensures that the developed system is user-friendly. To achieve this, the complexity of the system must be kept to a minimum and users should have no difficulty accessing its functionality. The flow of information also needs to be analysed to detect any errors, and these errors must then be fixed.

- Business requirement: To satisfy the business requirements, test cases must be developed and the test results documented, which improves efficiency. The test cases should be designed so that the system fulfils the requirements of the business for which it is being developed and its error rate is close to zero. These test cases must therefore ensure that the final version of the software can manage the information and provide the functionality that the system requires.
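To make the difference between the unit and integration tests described above concrete, here is a hedged sketch using Python's standard `unittest` module. The functions `total_score` and `rank_results` are hypothetical stand-ins for eStage's scoring and results features; the unit tests check `total_score` in isolation, while the integration test checks that scoring and ranking work together.

```python
import unittest


# Hypothetical unit under test: totals the judges' scores for one competitor.
def total_score(scores: list[float]) -> float:
    if not scores:
        raise ValueError("at least one judge score is required")
    return sum(scores)


# Hypothetical second module: ranks competitors by their total score.
def rank_results(results: dict[str, list[float]]) -> list[tuple[str, float]]:
    totals = {name: total_score(s) for name, s in results.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


class TotalScoreUnitTest(unittest.TestCase):
    """Unit tests: exercise total_score on its own."""

    def test_sums_scores(self):
        self.assertEqual(total_score([7.0, 8.0, 9.0]), 24.0)

    def test_rejects_empty_scores(self):
        with self.assertRaises(ValueError):
            total_score([])


class ResultsIntegrationTest(unittest.TestCase):
    """Integration test: scoring and ranking working together."""

    def test_ranking_uses_totals(self):
        ranked = rank_results({"Ana": [7.0, 8.0], "Ben": [9.0, 9.0]})
        self.assertEqual(ranked, [("Ben", 18.0), ("Ana", 15.0)])


if __name__ == "__main__":
    # exit=False lets the script continue after the test run.
    unittest.main(argv=["estage-tests"], exit=False)
```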

All of the tests described above are carried out through test cases. The use cases from which these test cases are derived are shown in the following table.

| Use Case ID | Description |
|---|---|
| UC-1 | Login to System |
| UC-2 | Profile Update in System |
| UC-3 | Rebooting the System |
| UC-4 | Deletion or Addition of users |
| UC-5 | Deletion or Addition of node |
| UC-6 | Powering Up |

4. Test Cases:

The corresponding test cases are listed in the following table.

| Test Case ID | Description |
|---|---|
| TC-1 | Login to System |
| TC-2 | Profile Update in System |
| TC-3 | Rebooting the System |
| TC-4 | Deletion or Addition of users |
| TC-5 | Deletion or Addition of node |
| TC-6 | Powering Up |

Each test case corresponds to one of the use cases listed earlier, so every use case is covered by a test; this traceability is intended to make the development and deployment of the online backstage system more efficient.
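A simple traceability check can confirm that every use case in the two tables above has a matching test case. The Python sketch below assumes, for illustration only, that a test case covers a use case when their descriptions match; `trace` and `uncovered` are hypothetical helper names.

```python
# Use cases and test cases as listed in the two tables above.
USE_CASES = {
    "UC-1": "Login to System",
    "UC-2": "Profile Update in System",
    "UC-3": "Rebooting the System",
    "UC-4": "Deletion or Addition of users",
    "UC-5": "Deletion or Addition of node",
    "UC-6": "Powering Up",
}
TEST_CASES = {
    "TC-1": "Login to System",
    "TC-2": "Profile Update in System",
    "TC-3": "Rebooting the System",
    "TC-4": "Deletion or Addition of users",
    "TC-5": "Deletion or Addition of node",
    "TC-6": "Powering Up",
}


def trace(use_cases: dict[str, str], test_cases: dict[str, str]) -> dict[str, list[str]]:
    """Map each use case to the test cases whose description matches it."""
    return {
        uc: [tc for tc, text in test_cases.items() if text == desc]
        for uc, desc in use_cases.items()
    }


def uncovered(use_cases: dict[str, str], test_cases: dict[str, str]) -> list[str]:
    """List use cases that no test case covers."""
    return [uc for uc, tcs in trace(use_cases, test_cases).items() if not tcs]


print(trace(USE_CASES, TEST_CASES)["UC-3"])  # ['TC-3']
print(uncovered(USE_CASES, TEST_CASES))      # []
```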

5. Document

Purpose

The online backstage management system is tested to ensure that no errors occur at runtime and that users can log in and manage the system efficiently.

Inputs

Users select their name from a drop-down list and enter their password in the designated field to log in to the system and manage information from their portal.

Expected Outputs

Once the user has been selected and the password entered, the credentials are validated to authorise the user, who is then redirected to the welcome page of the information system. The functionality available in the portal depends on the user's roles and responsibilities; after these are analysed, the user is redirected to the corresponding page.
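The login flow described above (select a user, validate the password, redirect according to role) could be sketched as follows. This is a minimal illustration, not eStage's implementation: the role names, the `WELCOME_PAGES` paths and the in-memory `USERS` store are hypothetical, and salted PBKDF2 hashing via Python's `hashlib.pbkdf2_hmac` is an assumed choice, since the document does not say how passwords are stored.

```python
import hashlib
import hmac
import os
from typing import Optional

# Hypothetical role-to-welcome-page mapping; eStage's real roles and URLs are not given.
WELCOME_PAGES = {
    "competitor": "/competitor/welcome",
    "stage_manager": "/stage-manager/welcome",
    "judge": "/judge/welcome",
}


def hash_password(password: str, salt: bytes) -> bytes:
    # Salted PBKDF2-HMAC-SHA256; the iteration count is an illustrative choice.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


# Hypothetical in-memory user store: name -> (salt, password hash, role).
_salt = os.urandom(16)
USERS = {"alice": (_salt, hash_password("s3cret", _salt), "stage_manager")}


def login(name: str, password: str) -> Optional[str]:
    """Return the welcome page for the user's role, or None if validation fails."""
    record = USERS.get(name)
    if record is None:
        return None
    salt, stored_hash, role = record
    # Constant-time comparison of the password hashes.
    if not hmac.compare_digest(hash_password(password, salt), stored_hash):
        return None
    return WELCOME_PAGES[role]


print(login("alice", "s3cret"))  # /stage-manager/welcome
print(login("alice", "wrong"))   # None
```

Comparing hashes with `hmac.compare_digest` rather than `==` is a common precaution against timing attacks.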

Test Procedure

A test plan is designed by dividing the information system into components and defining a testing procedure for each. Functional and non-functional testing is performed to check the performance of the system and the errors it generates. The specific test methodology is chosen after analysing the different procedures and the test plan. The scalability and performance of the system are also evaluated in order to identify constraints, and the security of the system is tested. Usability testing is also carried out to assess how usable the information system is and what performance it delivers. Once a fault is identified, it must be recorded in the information system and fixed, so that the system is functional and the final product has no quality issues.
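The record-and-fix step at the end of the test procedure can be sketched as a small defect log. The `Defect` and `DefectLog` names, the status values and the extra re-test (`VERIFIED`) stage are assumptions made for illustration; the document only requires that faults be recorded and fixed.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    OPEN = "open"          # fault recorded, not yet fixed
    FIXED = "fixed"        # developer reports a fix
    VERIFIED = "verified"  # assumed re-test confirms the fix


@dataclass
class Defect:
    defect_id: str
    test_case: str
    description: str
    status: Status = Status.OPEN


class DefectLog:
    """Hypothetical log in which faults are recorded and tracked until fixed."""

    # Each status may only move forward to the next stage.
    _NEXT = {Status.OPEN: Status.FIXED, Status.FIXED: Status.VERIFIED}

    def __init__(self) -> None:
        self._defects: dict[str, Defect] = {}

    def record(self, defect_id: str, test_case: str, description: str) -> Defect:
        defect = Defect(defect_id, test_case, description)
        self._defects[defect_id] = defect
        return defect

    def advance(self, defect_id: str) -> Status:
        defect = self._defects[defect_id]
        if defect.status not in self._NEXT:
            raise ValueError(f"{defect_id} is already verified")
        defect.status = self._NEXT[defect.status]
        return defect.status

    def outstanding(self) -> list[str]:
        # Defects that still block release of the final product.
        return [d.defect_id for d in self._defects.values()
                if d.status is not Status.VERIFIED]


log = DefectLog()
log.record("D-1", "TC-1", "Hypothetical: login accepts an empty password")
log.advance("D-1")
print(log.outstanding())  # ['D-1']
log.advance("D-1")
print(log.outstanding())  # []
```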

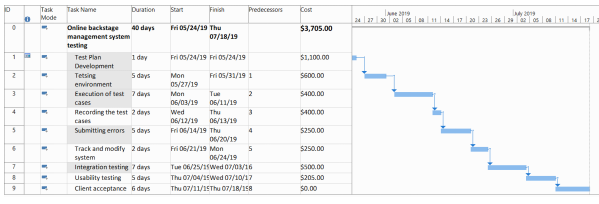

6. Gantt Chart

7. Budget

Minimum lines of code: $s_i = 500$

Maximum lines of code: $s_n = 2000$

Most likely lines of code: $s_m = 1300$

$$\text{Estimated lines of code} = s_i + s_n + 0.67\,s_m = 500 + 2000 + 0.67 \times 1300 = 3371$$

$$\text{KLOC} = \frac{3371}{1000} = 3.371$$

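Estimation of the scale exponent B (disproportionate effort)

| Scale factor | Rating (1–5) |
|---|---|
| Precedentedness | 2 |
| Development flexibility | 3 |
| Architecture/risk resolution | 3 |
| Team cohesion | 2 |
| Process maturity | 3 |
| Total | 13 |

$$B = 1.01 + \frac{13}{100} = 1.01 + 0.13 = 1.14$$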
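The size estimate and the scale exponent above can be reproduced with a few lines of Python. This sketch uses the size formula exactly as stated in this document and the exponent rule B = 1.01 + (sum of ratings)/100 implied by the calculation above; the function names are hypothetical.

```python
def estimated_loc(s_i: float, s_n: float, s_m: float) -> float:
    # Size formula as used in this document: s_i + s_n + 0.67 * s_m.
    return s_i + s_n + 0.67 * s_m


def scale_exponent(ratings: dict[str, int]) -> float:
    # B = 1.01 + (sum of scale-factor ratings) / 100.
    return 1.01 + sum(ratings.values()) / 100


kloc = estimated_loc(500, 2000, 1300) / 1000
b = scale_exponent({
    "precedentedness": 2,
    "development flexibility": 3,
    "architecture/risk resolution": 3,
    "team cohesion": 2,
    "process maturity": 3,
})
print(round(kloc, 3), round(b, 2))  # 3.371 1.14
```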

Estimation of M (multiplier reflecting product, process and people attributes)

| Attribute group | Cost driver | Value | Rating |
|---|---|---|---|
| Product | Application database size | 1.00 | High |
| Product | Product complexity | 1.00 | Nominal |
| Hardware | Runtime performance constraint | 1.00 | High |
| Hardware | Memory constraints | 1.06 | High |
| Personnel | Application experience | 1.00 | Nominal |
| Personnel | Virtual machine experience | 1.10 | Low |
| Personnel | Programming language experience | 1.00 | Nominal |
| Project | Software tool usage | 1.08 | Low |
| Project | Development schedule | 1.00 | Nominal |

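$$M = 1.00 \times 1.00 \times 1.00 \times 1.06 \times 1.00 \times 1.10 \times 1.00 \times 1.08 \times 1.00 = 1.25928 \approx 1.26$$

Effort estimation

$$\text{Effort} = A \times \text{Size}^{B} \times M$$

$$= 2.4 \times 3.371^{1.14} \times 1.26 \approx 2.4 \times 3.996 \times 1.26 \approx 12 \text{ PM}$$

where $A = 2.4$, Size is the estimated size in KLOC, and PM denotes person-months.

Time needed for development

$$\text{TDEV} = 3 \times \text{PM}^{\,0.33 + 0.2\,(B - 1.01)}$$

$$= 3 \times 12^{\,0.33 + 0.2 \times 0.13} = 3 \times 12^{0.356} \approx 7.3 \text{ months}$$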
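The remaining COCOMO-style steps can be checked numerically as well. The sketch below takes KLOC = 3.371, B = 1.14 and A = 2.4 from the calculations above and multiplies the cost-driver values listed above; it is a worked check of the formulas, not a calibrated estimation tool, and the function names are hypothetical.

```python
import math

# Cost-driver values as listed in this section.
COST_DRIVERS = {
    "application database size": 1.00,
    "product complexity": 1.00,
    "runtime performance constraint": 1.00,
    "memory constraints": 1.06,
    "application experience": 1.00,
    "virtual machine experience": 1.10,
    "programming language experience": 1.00,
    "software tool usage": 1.08,
    "development schedule": 1.00,
}


def multiplier(drivers: dict[str, float]) -> float:
    # M is the product of all cost-driver values.
    return math.prod(drivers.values())


def effort_pm(kloc: float, b: float, m: float, a: float = 2.4) -> float:
    # Effort = A * Size^B * M, in person-months.
    return a * kloc ** b * m


def tdev_months(pm: float, b: float) -> float:
    # TDEV = 3 * PM^(0.33 + 0.2 * (B - 1.01)), in months.
    return 3 * pm ** (0.33 + 0.2 * (b - 1.01))


m = multiplier(COST_DRIVERS)
pm = effort_pm(3.371, 1.14, m)
print(round(m, 2), round(pm, 1), round(tdev_months(pm, 1.14), 1))  # 1.26 12.1 7.3
```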

Actual Cost

Average Cost

Cost of Hardware = 20,000

Cost of Software = 1,000

Cost of Material = 700

Employee Cost = 10,000

Total cost = 20,000 + 1,000 + 700 + 10,000 = 31,700

Actual Cost = Effort × Total cost

= 12 × 31,700

= 380,400
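The cost arithmetic can be reproduced the same way. The figures are those listed above; no currency is stated in the document, so none is assumed, and the effort used is the rounded 12 person-months. The function name `actual_cost` is hypothetical.

```python
# Cost components as listed in this section (currency not stated).
COSTS = {
    "hardware": 20_000,
    "software": 1_000,
    "material": 700,
    "employee": 10_000,
}
EFFORT_PM = 12  # rounded effort estimate in person-months


def actual_cost(costs: dict[str, int], effort_pm: int) -> int:
    # Actual cost = effort * total cost, as defined in this document.
    return effort_pm * sum(costs.values())


print(sum(COSTS.values()), actual_cost(COSTS, EFFORT_PM))  # 31700 380400
```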

8. Report

Testing is carried out with the involvement of the test personnel, who need to be notified in order to:

- Eliminate errors from the software product

- Define testing rules for the personnel

- Perform usability testing on the software product

A report must be created and shared with the directors by email, with the test logs and other information about the software embedded, including:

- Test team contact

- Incident description

- Incident cause and effect
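The report described above could be assembled with Python's standard `email.message.EmailMessage` class. The sketch below is hypothetical: the recipient address, subject line, sample incident and function name are placeholders, and actually sending the message (for example with `smtplib`) is left out.

```python
from email.message import EmailMessage


def build_incident_report(contact: str, description: str, cause: str,
                          effect: str, test_log: str) -> EmailMessage:
    """Assemble a hypothetical incident report email for the directors."""
    msg = EmailMessage()
    msg["Subject"] = "eStage test incident report"
    msg["From"] = contact
    msg["To"] = "directors@example.com"  # placeholder recipient
    msg.set_content(
        f"Test team contact: {contact}\n"
        f"Incident description: {description}\n"
        f"Incident cause: {cause}\n"
        f"Incident effect: {effect}\n"
    )
    # Embed the test log as a plain-text attachment.
    msg.add_attachment(test_log, filename="test-log.txt")
    return msg


report = build_incident_report(
    contact="test-team@example.com",
    description="TC-1 failed: user was not redirected after login",
    cause="Missing role mapping",
    effect="User cannot reach the welcome page",
    test_log="TC-1 FAIL\n",
)
print(report["Subject"])  # eStage test incident report
```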
