Introduction

Communication refers to the exchange of information and ideas among people. It is considered an essential tool for increasing productivity and maintaining strong, long-lasting working relationships (Markovic, Mirjana and Aidin 2018, 11). The following sections of this report compare various models of communication.

Harold Lasswell linear communication model vs. Action model

The Harold Lasswell linear communication model was developed in 1948. It is considered one of the most influential models because it helps in analysing the whole communication process, which it treats as linear and unidirectional. It addresses five questions: who communicates the message, what is communicated, how (through which channel) it is communicated, to whom it is communicated, and with what effect. The model is simple and is valid for all types of communication (Zahra, and Mahshid 2017, 4). Its limitations are that it does not include feedback and does not mention noise in the communication process. The model is also known as the action model, which is also used for interpersonal communication between groups or individuals. In the action model the sender encodes the message and channels it to the receiver in the presence of noise. It is just another name for the Harold Lasswell model (Denis and Sven 2015).

Harold Lasswell model vs. Interactive model

The interactive model of communication focuses on the exchange of information from the sender to the receiver and back from the receiver to the sender, whereas the Harold Lasswell model is a one-way communication model that does not allow the receiver to speak. The main role of the receiver under the Harold Lasswell model is to listen (Dean C 2017, 47-57).

Under the interactive model both parties are able to receive messages and feedback from each other, while the Harold Lasswell model does not take feedback from the receiver of the message. The interactive model includes the concept of noise and considers the barriers to communication, while the Harold Lasswell model assumes that communication has a clear-cut beginning and end. The Harold Lasswell model was mainly used for mass communication, while the interactive model was developed for interpersonal communication. Under the interactive model, the effectiveness of communication is analysed through the common field of experience of the sender and the receiver (Dean C 2017, 47-57).

The interactive communication model gives both parties the opportunity to give their opinions and is a very dynamic, ever-changing model. However, it increases the risk of misunderstanding and wrong interpretation of messages, and the involvement of multiple sources makes it difficult to communicate the message (Sharma 2018).

Harold Lasswell model vs. Transactional model

In the transactional communication model, the sender and the receiver are both considered communicators. Under this model the communicators are interdependent and equally important, while under the Harold Lasswell model the sender is not dependent upon the receiver and vice versa. Under the transactional model the reactions of each person depend on factors such as cultural beliefs, prior experiences and self-esteem, while the Harold Lasswell model does not consider any factors that may create noise in the process of communication. In the transactional model feedback is received simultaneously, while the Harold Lasswell model does not include feedback in the communication process. The transactional model considers the role of the environment and the context, while the Harold Lasswell model is not concerned with the environment. The transactional model treats communication as an ongoing process and contains ellipses that lie in the external environment. It connects people through communication, and all the elements under this model are interrelated (Sharma 2018).

In contrast to the transactional model, the Harold Lasswell model does not provide equal opportunity to both parties, and the elements in the communication process are independent. Therefore the transactional model is more effective than the Harold Lasswell model, because it provides clarity in the content and the role of each element depends upon the reaction of the other (Denis and Sven 2015).

Under the Harold Lasswell model noise is not considered, while the opportunity for noise is greater in the transactional model because communication takes place simultaneously. The transactional model demands more verbal communication to reduce misunderstandings in the interpretation of the message (Denis and Sven 2015).

Conclusion

From the above discussion it is important to note that the Harold Lasswell model focuses on mass communication, while the other two models emphasise interpersonal communication. The Harold Lasswell model does not consider feedback, while the interactive and transactional models stress the context and the feedback from both parties involved in communication.

References

Abazari, Zahra, and Mahshid Borjian Brojeni. “The Role of Harold Laswell Communication Theory in Librarianship and Information Science.” International Academics Journal of Humanities, 2017. 4.

Ashish, Sharma. Introduction to mass communications. Evincepub Publishing, 2018.

Barnlund, Dean C. “A transactional model of communication.” Communication theory. Routledge, 2017. 47-57.

Markovic, Mirjana Radovic, and Aidin Salamzadeh. “The Importance of Communication in Business Management.” Business Management, Entrepreneurship and Entrepreneurial Tendencies, 2018. 11.

McQuail, Denis, and Sven Windahl. Communication models for the study of mass communications. Routledge, 2015.

Research Methods

Facial recognition algorithm.

Facial recognition is an approach in the technology field that deals with learning and recognising faces and with identifying a face from an image sent to the system. It is mostly applied in security measures (Naik, M.K. and Panda, R., 2016).

There are numerous methods of identifying a face. An algorithm can use data to derive a configuration that characterizes a particular individual.

Operation modes of face recognition systems.

- Verification/authentication of facial images. The system matches the test facial image with the one saved in the system in order to grant permission.

- Identification. The system compares the test facial image with those stored in the system, with the goal of discovering the user that matches that face.
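The difference between the two modes can be sketched in a few lines of Python. This is only an illustrative sketch: it assumes each face has already been reduced to a numeric feature vector, and the function names, the threshold and the example vectors are hypothetical rather than taken from any of the cited systems.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def verify(probe, enrolled, threshold):
    """Verification (1:1): accept the claimed identity if the test vector
    is close enough to the single template stored for that identity."""
    return euclidean(probe, enrolled) <= threshold


def identify(probe, gallery):
    """Identification (1:N): return the name whose stored vector is
    nearest to the test vector. `gallery` maps names to vectors."""
    return min(gallery, key=lambda name: euclidean(probe, gallery[name]))


if __name__ == "__main__":
    # Made-up two-dimensional feature vectors, for illustration only.
    gallery = {"alice": [0.0, 0.0], "bob": [3.0, 4.0]}
    print(verify([0.0, 1.0], gallery["alice"], threshold=1.5))
    print(identify([2.5, 3.5], gallery))
```

Verification answers a yes/no question about one claimed identity, so it needs a threshold; identification always returns the nearest stored identity, so a practical system would usually add a threshold there too in order to reject unknown faces.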

Stated below are several common categories of face recognition algorithms.

Fisherface algorithm.

The Fisherface algorithm is among the most common algorithms used for face recognition. It is believed to stand out among other techniques such as eigenface because it makes a greater effort to exploit the differences between classes during the training process (training is also used to establish how successful the resulting system is). Face recognition with this algorithm centres on reducing the dimension of the image space using Principal Component Analysis (PCA). Afterwards, Linear Discriminant Analysis (LDA) is applied to extract the characteristic features of the image. The recognition system is designed to identify a face image by matching the results of feature extraction (Gangopadhyay, I., Chatterjee, A. and Das, I., 2019). The system must then determine whether the face image under test has been identified correctly or not. During image processing, the algorithm produces a feature vector from the facial image data and then compares the feature vector of each training image with the feature vector of the image under test using the Euclidean distance. The algorithm is reported to be capable of identifying test face images with an accuracy of up to one hundred percent.
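For readers who prefer a formula, the two quantities involved can be written out as follows. This is the standard textbook form of the LDA criterion and of the Euclidean distance, not a formula taken from the cited paper; the symbols $S_B$ (between-class scatter), $S_W$ (within-class scatter), $W$ (projection matrix) and the feature vectors $\mathbf{x}$, $\mathbf{y}$ are notation introduced here for illustration.

```latex
% LDA step: choose the projection W that maximises between-class scatter
% relative to within-class scatter (scatter matrices are computed on the
% PCA-reduced training vectors).
W^{*} = \arg\max_{W} \frac{\left| W^{\top} S_B W \right|}{\left| W^{\top} S_W W \right|}

% Matching step: Euclidean distance between the feature vector x of the
% test image and the feature vector y of a training image.
d(\mathbf{x}, \mathbf{y}) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}
```

The training image whose feature vector gives the smallest $d$ is taken as the match for the image under test.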

Eigenface algorithm.

The eigenface algorithm is another face recognition method, used to distinguish individuals from one another. During the recognition process, an eigenface representation is computed for the face image. The Euclidean distances between this representation and those previously stored in the system are then calculated. The stored eigenface with the smallest Euclidean distance is the one that most closely resembles the person (Wagh, P., Thakare, R., Chaudhari, J. and Patil, S., 2015). The results are mostly simulated using MATLAB.

Local Binary Pattern Histogram (LBPH).

The local binary pattern algorithm is among the earlier face recognition techniques. The local binary pattern can be described as an effective texture operator that labels the pixels of an image by thresholding the neighbourhood of each pixel and expressing the result as a binary number. When combined with histograms, the images are characterized by a simple data vector. The algorithm takes the following parameters: the radius around the centre pixel, which is commonly set to one; the number of neighbours, normally set to eight; and grid X and grid Y, which are both set to eight. Finally, to identify the image that corresponds to the test image, two histograms are compared. The image with the closest histogram is identified as the likely match. The comparison is based on the distance between the histograms, which can be calculated using the chi-square or absolute value measures, among many other methods (Acharya, U.R., Ng, W.L., Rahmat, K., Sudarshan, V.K. et al., 2017).
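The thresholding and histogram comparison described above can be sketched in plain Python. This is a simplified illustration, not the implementation used by any of the cited works: it assumes a greyscale image given as a list of rows, uses radius one and eight neighbours, and builds a single histogram for the whole image instead of splitting it into an 8 x 8 grid. All function names are hypothetical.

```python
# Offsets of the eight radius-1 neighbours, clockwise from the top-left.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, row, col):
    """Threshold the eight neighbours against the centre pixel and
    read the resulting bits as one number between 0 and 255."""
    centre = image[row][col]
    code = 0
    for d_row, d_col in NEIGHBOURS:
        bit = 1 if image[row + d_row][col + d_col] >= centre else 0
        code = (code << 1) | bit
    return code


def lbp_histogram(image):
    """Count how often each LBP code occurs over all interior pixels."""
    histogram = [0] * 256
    for row in range(1, len(image) - 1):
        for col in range(1, len(image[0]) - 1):
            histogram[lbp_code(image, row, col)] += 1
    return histogram


def chi_square_distance(h1, h2):
    """Chi-square distance between two histograms (0 means identical)."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def closest_match(test_histogram, gallery):
    """Return the label of the stored histogram nearest to the test one."""
    return min(gallery,
               key=lambda label: chi_square_distance(test_histogram, gallery[label]))


if __name__ == "__main__":
    sample = [[5, 9, 1],
              [4, 6, 7],
              [2, 3, 8]]
    print(lbp_code(sample, 1, 1))  # 88, i.e. binary 01011000
```

Because only the comparison with the centre pixel matters, adding the same constant to every pixel leaves the codes unchanged, which is the greyscale invariance usually credited to the method.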

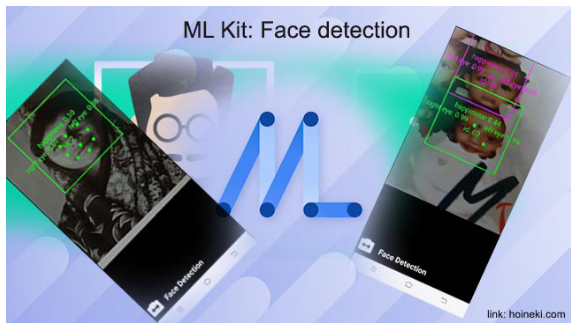

All these methods aim at the same goal of face recognition, but each algorithm takes a different approach. Below is a comparison of the algorithms stated above. The following are images of apps that use the LBPH algorithm.

Fig. 1.0: Face recognition app

Fig. 1.1: Face recognition app ready for scanning

Fig. 1.2: Face recognition app

Fig. 1.3: Face recognition app on phone

Fig. 1.4: Face recognition app

Fig. 1.5: Face app installed

Fig. 1.6: Face app fully installed on phones

Strong points.

| Eigenface algorithm | Fisherface algorithm | Local binary pattern histogram |
| --- | --- | --- |
| No knowledge of the geometry or reflectance of faces is necessary. | Compared with the eigenface algorithm, it shows a marked improvement in the classification of images. | It has very high discriminative power. |
| The principle of low-dimensional subspace representation is employed to achieve an appropriately compact data representation. | The approach has the advantage of being more invariant to light intensity. | The histogram distances between different images are simple to compute. |
| Face recognition is simple and very effective in relation to other types of algorithms. | It offers improved accuracy under varying facial expressions compared with other approaches such as eigenface. | It is considered one of the easiest face recognition algorithms to use because it represents the local features of the image. |
| Raw data are used directly for face recognition and learning, without any substantial mid-level processing. | The approach is not affected by noisy images or by blurring of the image. | It gives decent performance in face recognition. |
| It is easy and quick to implement. | The approach is easy and simple to learn. | The algorithm is invariant to changes in greyscale. |
| The stages of face recognition are straightforward. | | |

Weaknesses.

| Eigenface algorithm | Fisherface algorithm | Local binary pattern histogram |
| --- | --- | --- |
| The algorithm is extremely sensitive to scale, so mid-level processing is needed to standardize the scale. | The algorithm is more intricate than eigenface in finding the projection of the face space. | The algorithm is sensitive to rotation of the images to be recognized. |
| The method is very sensitive to the position of the head and to lighting. | Fisherface processing requires a large amount of storage space for the image under test, and more processing time is needed. | Increasing the number of neighbours increases the size of the feature, which increases computational complexity in terms of time and space. |
| The recognition rate varies with pose and illumination, and decreases when either of them changes. | First-time learning is very time consuming. | The structural information captured by the algorithm is limited, since only pixel differences are used and magnitude information is disregarded. |
| The method may need an even image background, which is not available in most natural scenes. | The approach does not cope easily with changes in face arrangement and facial expression. | |
| Learning is very time consuming, which limits updating of the face database. | | |

Below is an illustration of local binary pattern histogram face identification process.

References

Naik, M.K. and Panda, R., 2016. A novel adaptive cuckoo search algorithm for intrinsic discriminant analysis-based face recognition. Applied Soft Computing, 38, pp.661-675.

Gangopadhyay, I., Chatterjee, A. and Das, I., 2019. Face Detection and Expression Recognition Using Haar Cascade Classifier and Fisherface Algorithm. In Recent Trends in Signal and Image Processing (pp. 1-11). Springer, Singapore.

Wagh, P., Thakare, R., Chaudhari, J. and Patil, S., 2015, October. Attendance system based on face recognition using eigen face and PCA algorithms. In 2015 International Conference on Green Computing and Internet of Things (ICGCIoT) (pp. 303-308). IEEE.

Acharya, U.R., Ng, W.L., Rahmat, K., Sudarshan, V.K., Koh, J.E., Tan, J.H., Hagiwara, Y., Gertych, A., Fadzli, F., Yeong, C.H. and Ng, K.H., 2017. Shear wave elastography for characterization of breast lesions: Shearlet transform and local binary pattern histogram techniques. Computers in biology and medicine, 91, pp.13-20.

Introduction

User experience is the overall experience of a person using a product such as a website or a computer application, especially in terms of how easy or pleasing it is to use. User experience focuses on how products work on the outside rather than on how they work on the inside. It deals with how the user interacts with a product, without considering the product's internal functionality. User experience design has recently been used to create products that give the user a pleasant experience. When users have a bad experience with your product, they may decide never to use it again.

This report explains the process and steps taken to improve the user interface of the onlineAuct website using Jesse Garrett's five planes of the elements of user experience. We start with the bottom planes and work up to the top planes. From bottom to top, the five planes are the strategy plane, scope plane, structure plane, skeleton plane and surface plane (Jesse, 2011).

Perform, Conduct, Evidence and Document Strategy Plane Research

Before we jump into design or start developing the website, we first need to identify what the owner really wants to get from the site and what users expect from it.

Goals and objectives of the system for the organization

The first step in creating a great user experience design is identifying the purposes of the intended product for the organization itself. The objectives need to be specific and accurate rather than general (Jesse, 2011). Below are the major goals/objectives of the onlineAuct website.

- To increase the number of users of the site.

- To increase the revenue generated by the website.

Users' expectations of the site

Identifying users' expectations is the first step in building a successful product. It begins with research to identify who our users are and what they need (Jesse, 2011). Once we have identified our audience, we can go a step further and find out from them what they want from the site.

After identifying a group of users, we divided them according to their characteristics and needs (Jesse, 2011). This was helpful because it allowed the designers to get different views from different audiences and to understand user needs better.

Conducting interviews

We first carried out research with several users to get their feedback and learn how they would like to interact with the system. We conducted personal interviews to understand customers' views and to find out which problems users want us to solve. Interviewing the stakeholders helped us identify the right audience for the product and the characteristics of the study participants. As with every product or service, the best offering comes from carefully identifying the target audience, their needs and their wants (Anton, 2017).

We started by analyzing the goals of our business and the kind of customers that use our services, to check whether it would be viable to build the system. We then collected as much data as we could from our target users to gather the needs of those who will use our product (Anton, 2017).

Our interview questions were based on the previous website. We tried to find out what was wrong with the site as well as what its best parts were. We gathered enough information about the previous website, both positive and negative.

The table below lists the interview questions the users were asked.

| No. | Interview question |
| --- | --- |
| 1 | What is your attitude toward the website when purchasing items? |
| 2 | How much time do you spend on the web every week? |
| 3 | How easy is it to use our website? |
| 4 | How many times have you successfully purchased an item through our site? |
| 5 | What are the challenges you encounter when trying to bid for a product? |
| 6 | When the process fails, what other brands do you turn to? |
| 7 | What feature makes you like the brand? |
| 8 | What feature would you like us to add to our system? |

The first question aims to understand users' attitudes towards online payments. Most users responded negatively: eighty per cent of them do not trust online payments.

The second question measured the time our users spend on technology. Do our users love technology? Do they devote their time to it? This helps us know whether we are dealing with the right type of users.

The previous website was a failure for the company, so the third question asks whether users found the site easy to use. Different users had different perceptions of the old website. Next, we measured the success rate of the old site by asking users how many successful bids they had made on it. If they had not yet made any bids, it is useful to know what challenges they had been facing.

The last questions target our competitors. We need details of what our competitors do and how they do it, and of what makes their sites successful. If we understand their strengths and weaknesses, we can use them to come up with a distinct product.

After conducting the interviews, we were able to select two personas based on their characteristics and needs. The personas represent the audiences that use the site.

User personas

Challenges with the old system

- It took a long time to load content.

- The payment system was not working as expected.

- The system was poorly organized.

Motivations

- To spend less time bidding for products.

- A simple website that is easy to use.

- A clear and attractive design.

- A well-secured payment system.

Each persona had a different view of how the system should be implemented, so we decided to create an empathy map. The empathy map helps us focus on what end users feel and think about the site, the challenges they face when using the website and, finally, how they may want to use it.

Finally, we set success metrics to track the progress of the system. We used key performance indicators to track whether the website is meeting the objectives set, and chose efficiency as our key performance indicator. We wanted to make sure that the website is efficient for the audience and that they can rely on it. Our key performance indicator measures were:

- The ease of accessing the system on both devices without difficulty.

- The time it took the web pages to load.

- The ease of making payments using the website.

UX Principles and Data to Scope Plane Outline

In this section, we identify the requirements and features the website will have, based on the responses we got from the audience. According to Jesse (2011), there are two reasons for defining the requirements:

- To know what we are building and,

- To know what we are not building.

The two reasons may appear to mean the same thing, but they serve different purposes. Knowing what you are supposed to build helps people understand the objectives and goals of the project and its expected due date. Knowing what you are not supposed to build helps in planning the features to be included in future.

To come up with the requirements of the website, we listed the functionalities the website is expected to have. At the same time, we identified the content that the website is supposed to contain. The content of the site can appear in different formats: images, audio, video and text. The formats can be combined to create a meaningful message for the audience.

Before defining the requirements and the content of the site, we first created an empathy map for our users.

Creating an empathy map.

We used the empathy map to understand the user's mindset. An empathy map also helps team members establish a common understanding of what the user needs.

According to Sarah (2017), the empathy map is divided into four quadrants:

- What the user says about the product.

- What the user thinks about the product.

- How the user feels about the product.

- What the user does with the product.

We used our two personas to create an empathy map.

This is what the users say

- I am expecting a different website.

- The website needs to be reliable.

- I need a secure system.

This is what the users think

- The website is wasting a lot of time.

- It is a good idea to come up with a new website.

- The website needs to solve the current problem.

This is how the users feel

- The user is overwhelmed.

- The user is excited.

- The user is disappointed with the old website.

- The user fears the website may not provide the solution.

What the users do with the old website

- Open the website.

- Login to the website

- Compare the website with other websites

- Do research

Creating user Scenarios

“A scenario is a short, simple narrative describing how a persona might go about trying to fulfill one of those user needs. By imagining the process our users might go through, we can come up with potential requirements to help meet their needs” (Jesse, 2011). A scenario focuses on how the user interacts with a product.

User scenarios help in identifying how users achieve their goals using the website. First, we identified a goal for the user: a new user bidding for a product. We then tried to articulate the process the user will go through while trying to achieve this goal.

The first step is to register on the site by providing personal information.

Secondly, the user will use the email/username and password to log in to the site. After successfully logging in, the user will be redirected to the home page. The home page will show the company information, sample products that the company offers and a search form to help the user search for a product.

The user will use the search form to find the product he or she wants to bid for.

After finding the product, the user will click on the product image and be redirected to another page. From this page, the user can bid for the product by entering the bid price.

Now that we had the steps our users can follow, the next step was to look for inspiration from competitors, because we all work with the same products and aim at the same goal. We did this to check whether the competitors had an effective feature that they have used to meet these objectives (Jesse, 2011).

After analyzing the user scenarios and looking for inspiration from the competitors, we met the stakeholders to get ideas about the requirements that the site will need. We also worked closely with our users to get ideas from their side. Finally, we collected all the requirements and grouped them into three categories.

- What people say they want

- What people say they want but do not actually want.

- What people don’t know they want.

Functional specifications

When writing the functional specifications we tried to be specific by giving a short description of each. We also tried to be positive: instead of describing bad things, we described what the system will do to prevent them.

Below are the functional specifications.

- New users will have to register with the system.

- Users will use their email and password to log in to the system.

- Users must log in to make a bid.

- Users will be notified by email when their bid goes through.

- Users will be allowed to make payment for bids that have been accepted.

- Users will filter product searches using the search form.
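The specifications above can be read as a small set of rules, sketched below as a toy in-memory Python class. This is only an illustration of how the rules fit together, not the onlineAuct implementation: the class, method and field names are hypothetical, passwords are kept in plain text purely to keep the sketch short, notifications are collected in a list instead of being emailed, and payment for accepted bids is left out.

```python
class AuctionSite:
    """Toy model of the functional specifications (all names hypothetical)."""

    def __init__(self, products):
        self.products = list(products)  # product names open for bidding
        self.passwords = {}             # email -> password (plain text: sketch only)
        self.logged_in = set()          # emails with an active session
        self.bids = []                  # (email, product, amount) tuples
        self.outbox = []                # (email, message) pairs that would be emailed

    def register(self, email, password):
        """New users have to register before they can log in."""
        if email in self.passwords:
            raise ValueError("email already registered")
        self.passwords[email] = password

    def login(self, email, password):
        """Users log in with their email and password."""
        ok = email in self.passwords and self.passwords[email] == password
        if ok:
            self.logged_in.add(email)
        return ok

    def search(self, keyword):
        """Filter the product list with the text typed into the search form."""
        keyword = keyword.lower()
        return [name for name in self.products if keyword in name.lower()]

    def place_bid(self, email, product, amount):
        """Only logged-in users may bid; a notification is queued on success."""
        if email not in self.logged_in:
            raise PermissionError("user must log in to make a bid")
        if product not in self.products:
            raise ValueError("unknown product")
        self.bids.append((email, product, amount))
        self.outbox.append((email, f"Your bid of {amount} on {product} went through"))


if __name__ == "__main__":
    site = AuctionSite(["Vintage clock", "Oak table"])
    site.register("user@example.com", "secret")
    site.login("user@example.com", "secret")
    site.place_bid("user@example.com", site.search("clock")[0], 120)
    print(site.outbox)
```

A real system would store salted password hashes and send the queued messages through an email service.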

Prioritizing functional specification

After listing all the functionalities the site should have, we prioritized the most critical features from the list. This process has a great impact because it allows us to put more effort into the features that are most critical to the site.

Content requirements

Finally, in the scope plane we decided on the content to be used in the site. Content has a great influence on user experience design because it gives users direction on how to interact with the website. Text, images, videos and PDF files are the main content of the site.

Text is used to give a formal description of the company and to describe the products and items on the site. Images will act as placeholders for the products, and videos will explain how to use the website.

The structure plane

We used interaction design and information architecture to understand how users think and behave. This helped us build a successful experience for the users. In interaction design we were concerned with how the system will respond to user behaviors and how it will accommodate those behaviors. Information architecture is concerned with how the content is organized to facilitate better understanding (Jesse, 2011).

In interaction design we had to define the actions users will take and how the website will respond to those actions. These include what the website will look like and which page will load when a user finds the site online.

The first idea was to have a landing page containing the login form and the signup button, which would open the sign-up form when clicked. Later we decided that the home page will be the first page to load when a user visits the site. The home page content describes the purpose, goals and vision of the company. It will be good to include the contact details at the top of the website and social icons in the bottom section.

DESIGNING THE ARCHITECTURE

The architecture of the website will have three interfaces. The first is the user interface, where users will interact with the system.

The admin interface will act as the control panel of the system.

The database interface is the data store of the website.

We used the principle of similarity to group the services. The major services that users use most frequently were placed on the left side, each in a large box of the same size to indicate the similarity of the services.

The principle of proximity says that elements that are close to each other are more likely to be seen as related than elements that are far apart. We used this principle to show related elements: they are separated from other elements by white space, which gives them breathing space.

Sitemap

LOW FIDELITY DESIGN OF THE SYSTEM

After learning about user needs and developing an information architecture, we had built the base of our UX strategy and were able to proceed with low-fidelity flow development.

Low-fidelity designs are created to understand the right sequence of the main user scenarios. They help to design and adapt the actions that users have to take in order to achieve their goals. We used paper and sticky notes to draw the low-fidelity design.

WIREFRAMES

Wireframes are blueprints that define the structure of a web page, its functionality and how the content will be organized. They are drawn in black and white, without images, to avoid distracting the user. Below are the wireframes for the system. Each wireframe includes the important elements of the web page: the logo, navigation, body content, user login buttons, search function and footer.

Home page wireframe

The wireframe represents the structure of the home page. The home page will have a logo at the top left, with the navigation to the right of the logo. Wireframes use dummy text and icons to represent the content of the web page.

Computer Science

Project discussion

The main aim of this project is to implement a syntactic analyzer for the existing grammar in a Windows 10/Cygwin environment using the C programming language. In the grammar, nonterminals are shown as red symbols, terminals as blue symbols, and EBNF symbols as black punctuation (Park, 2017). The grammar covers functions with their variable lists, expressions and operators, including binary operators, and the value types integer, real and Boolean for variables and parameters. For variable declarations, the syntactic analyzer detects syntax errors and reports error messages; recovery from syntax errors through synchronization is also investigated.

Test plan of compiler program

To test our compiler, we should now use test programs that contain function calls. Try a wide range of calls. Test arguments that are ints, doubles, numbers and expressions. Test functions with no parameters, one parameter and several parameters. Also use arguments that are themselves function calls (LEXICAL COLLOCATION ANALYSIS, 2019). We will implement the operators in a logical order that allows us to test our work as we go. The print and read statements have already been implemented to facilitate the testing of other expressions. We can therefore begin testing right away, in order to learn the test procedure and to check whether our operations work so far. The tests cover the multiplying operators, relational operators, binary logical operators, the highest-precedence unary logical operator, arithmetic operators, integer expressions, floating-point expressions (Park, 2017) and all kinds of mixed-mode expressions. Once your compiler is fixed, you may comment out those print statements again. Use a test program that contains function definitions, so that the syntactic analyzer detects and recovers from additional syntax errors, using the semicolon as the synchronization token in variable declarations.

Project improvement

Now let us test our program’s ability to detect syntax errors. Run the program and enter a line, for example:

$ 123 ++ 456

What error was detected? Can you see why?

$ 123 456

The problem concerns what happens when the program quits. If the parser has correctly parsed a syntactically correct program, the last token received from the lexer should be EBNF (Cifuentes Honrubia and Rodríguez Rosique, 2011). Otherwise, the lexer was not at the end of the expression. However, the program does not check that this was the case; it simply quits after the production for E has been satisfied. Correct this by requiring that, after the production for E has been satisfied, the token be EBNF in order for the parser to report that the input was accepted. The parser also contains separate function statements for the first-line and last-line messages and for the returned variable types.
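The correction described above can be illustrated with a small recursive-descent sketch. It is written in Python for brevity, although the project itself uses C, and it rests on several assumptions: the production for E is taken to be a number followed by any number of `+` or `-` and another number, the end token that the text calls EBNF is modelled as an end-of-input marker named `EOF`, and every function name is hypothetical.

```python
import re

TOKEN_RE = re.compile(r"\s*(?:(\d+)|(.))")


class ParseError(Exception):
    """Raised when the input does not match the production for E."""


def tokenize(text):
    """Turn the input line into NUM and OP tokens, followed by an EOF marker."""
    tokens = []
    for number, other in TOKEN_RE.findall(text):
        if number:
            tokens.append(("NUM", number))
        elif other.strip():
            tokens.append(("OP", other))
    tokens.append(("EOF", ""))
    return tokens


def parse_expression(tokens):
    """E -> NUM { ('+' | '-') NUM }. Returns the index of the first
    token that was NOT consumed by the production."""
    pos = 0

    def expect_number():
        nonlocal pos
        kind, text = tokens[pos]
        if kind != "NUM":
            raise ParseError(f"expected a number, found {text or 'end of input'!r}")
        pos += 1

    expect_number()
    while tokens[pos] in (("OP", "+"), ("OP", "-")):
        pos += 1
        expect_number()
    return pos


def accepts(text):
    """Accept the input only if E is satisfied AND the next token is EOF."""
    tokens = tokenize(text)
    try:
        pos = parse_expression(tokens)
    except ParseError:
        return False
    # The fix: satisfying E is not enough, the lexer must also be at the end.
    return tokens[pos][0] == "EOF"


if __name__ == "__main__":
    for line in ["123 + 456", "123 ++ 456", "123 456"]:
        print(line, "->", "accepted" if accepts(line) else "rejected")
```

With the final check in place, `123 ++ 456` is rejected inside the production (a number is expected after the first `+`), while `123 456` is rejected by the end-of-input check: E is satisfied after `123`, but the next token is a number rather than the end marker.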

References

Cifuentes Honrubia, J. and Rodríguez Rosique, S. (2011). Spanish word formation and lexical creation. Amsterdam: John Benjamins Pub. Co.

LEXICAL COLLOCATION ANALYSIS. (2019). [S.l.]: SPRINGER.

Park, J. (2017). Syntactic complexity as a predictor of second language writing proficiency and writing quality.